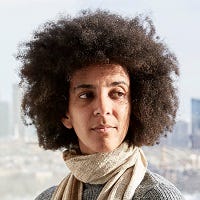

AI Ethicist Fired from Google Pursues AI Ethics With Her Own Launch

Timnit Gebru, the former AI ethicist at Google who separated from the company in a dispute over her research into bias in large language models, has launched her own organization, Distributed AI Research.

By John P. Desmond, Editor, AI in Business

Google separated from its AI ethicist Timnit Gebru in December 2020, and later from Margaret Mitchell, her co-lead on the company’s Ethical AI team, but the ethical issues Gebru identified remain. She has started her own organization, Distributed Artificial Intelligence Research (DAIR), to continue her AI research and has won foundation backing to get it off the ground.

DAIR has received $3.7 million in funding from the MacArthur Foundation, the Ford Foundation, the Kapor Center, the Open Society Foundations and the Rockefeller Foundation, according to an account in the Washington Post.

“I’ve been frustrated for a long time about the incentive structures that we have in place and how none of them seem to be appropriate for the kind of work I want to do,” Gebru stated.

Her intent is to form a research group outside of corporate or military influence that tries to prevent harm from AI systems by focusing on global perspectives and underrepresented groups. The field of AI is dominated by large companies with access to the vast amounts of data and computing power needed to execute applied AI systems.

Gebru argues that this concentration makes AI less accessible to the groups most at risk of being hurt by it. It also leaves the big tech companies free to ignore issues of bias and negative outcomes and, as in Gebru’s case, to silence some of those asking questions.

“AI is not inevitable, its harms are preventable, and when its production and deployment include diverse perspectives and deliberate processes, it can be beneficial,” states the vision statement from DAIR. Gebru will serve as the group’s executive director.

Seeks to Focus on Impact of Social Media Efforts on Dangerous Content

Gebru is Eritrean and fled Ethiopia in her teens during a war between the two countries. She wants to research the impact of social media in regions where little effort is placed on preventing and removing dangerous content. Social media platforms have underinvested in “languages and places that are considered not important,” she stated in an account from BloombergQuint.

DAIR joins a group of independent institutes that includes Data & Society, the Algorithmic Justice League, and Data for Black Lives. Gebru’s plan is to influence AI policies and practices at Big Tech companies such as Google from the outside.

DAIR’s advisory committee includes Safiya Noble, a recent recipient of a MacArthur “genius grant” and author of the book Algorithms of Oppression, and Ciira wa Maina, co-founder of Data Science Africa, who has researched food security, climate change and conservation.

Gebru will continue to focus on Big Tech. “We’re always putting out fires. Before it was large language models, and now I’m looking at social media and what’s happening where I grew up,” she stated to The Washington Post, in reference to reports about Facebook’s role in stoking ethnic violence in Ethiopia.

The firing of Gebru and Mitchell has had repercussions for Google. In the spring, the ACM Conference on Fairness, Accountability, and Transparency (FAccT) decided to suspend its sponsorship relationship with Google, conference sponsorship co-chair and Boise State University assistant professor Michael Ekstrand confirmed to VentureBeat.

Gebru, a cofounder of FAccT, continues to work as part of a group advising on data and algorithm evaluation and as a program committee chair. Mitchell is a past program co-chair of the conference and a FAccT program committee member.

Scrutinized Large Language Models While at Google

Gebru ran into headwinds at Google around a paper she coauthored titled, “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” The authors questioned whether pretrained language models may disproportionately harm marginalized communities, and whether progress can really be measured by performance on benchmark tests. The paper also raised concerns about large language models’ potential for misuse.

An excerpt from the paper states, “If a large language model, endowed with hundreds of billions of parameters and trained on a very large dataset, can manipulate linguistic form well enough to cheat its way through tests meant to require language understanding, have we learned anything of value about how to build machine language understanding or have we been led down the garden path?”

DAIR plans to work on uses for AI unlikely to be developed elsewhere. One of the group’s current projects is to create a public data set of aerial imagery of South Africa to examine how the legacy of apartheid is still etched into land use. A preliminary analysis of the images showed that much vacant land developed between 2011 and 2017 was converted into wealthy residential neighborhoods.

A paper on that project will mark DAIR’s formal debut in academic AI research at this year’s NeurIPS, a prominent AI conference, according to an account in Wired. DAIR’s first research fellow, Raesetje Sefala, who is based in South Africa, is lead author of the paper, which includes outside researchers.

DAIR advisory board member Noble, a professor at UCLA who researches how tech platforms shape society, stated that Gebru’s project is an example of the more inclusive research needed to make progress on understanding technology’s effects on society.

“Black women have been major contributors to helping us understand the harms of big tech and different kinds of technologies that are harmful to society, but we know the limits in corporate America and academia that Black women face,” stated Noble. “Timnit recognized harms at Google and tried to intervene but was massively unsupported—at a company that desperately needs that kind of insight.”

Read the source articles and information in the Washington Post, BloombergQuint, VentureBeat, and Wired.