AI Transparency Elusive, Opaque; Needs Defining

Despite limitations, transparency is likely to be the basis for policymakers, companies, civil society groups and the public to work together to engender trust in AI

By John P. Desmond, Editor, AI in Business

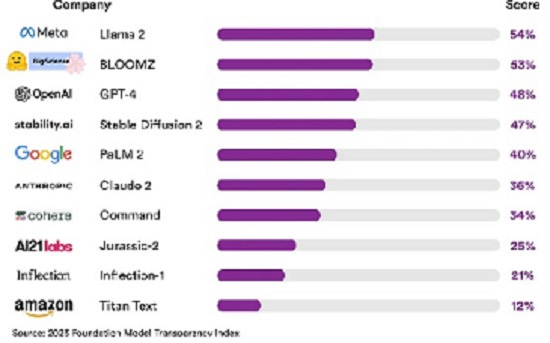

Top AI outfits were found to have failed an AI transparency test formulated by researchers at Stanford University that considers 100 factors.

The best score was earned by Meta’s Llama 2 model, which scored 54 percent, meaning it satisfied 54 of the 100 factors, according to an account of the research published this week in IEEE Spectrum. BloomZ from Hugging Face was second with 53 percent; OpenAI’s ChatGPT scored 48 percent; and Amazon’s Titan Text scored 12 percent, the lowest score recorded.

Stanford’s Center for Research on Foundation Models performed the study.

In a blog post, the researchers stated, “No major foundation model developer is close to providing adequate transparency, revealing a fundamental lack of transparency in the AI industry.”

Not so good. PhD candidate Rishi Bommasani, a project lead, said publication of the index may help reverse what he sees as a troubling trend. “As the impact goes up, the transparency of these models and companies goes down,” he stated. For example, when OpenAI released GPT-4, succeeding GPT-3, the company decided to withhold all information about model size, hardware, training efforts and methods, and dataset construction.

The 100 metrics of transparency used by the researchers include: upstream factors related to training, information about the model’s properties and functions, and downstream factors regarding the model’s distribution and use. Kevin Klyman, a coauthor of the report, stated that when a new model is released, the supplier “has to be transparent about the resources that go into that model, and the evaluations of the capabilities of that model, and what happens after the release.”
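The percentages reported for each model are simple arithmetic: a factor is either satisfied or not, and the score is the share of factors satisfied. The following is a minimal sketch of that calculation, assuming binary indicators grouped under the three domains the researchers describe. The indicator names, their values and the function `transparency_scores` are hypothetical placeholders for illustration, not data or code from the Stanford index.

```python
from collections import defaultdict

# Hypothetical indicators as (domain, name, satisfied). Illustrative only;
# these are NOT the factors or results from the Stanford study.
INDICATORS = [
    ("upstream", "data sources disclosed", True),
    ("upstream", "data labor wages disclosed", False),
    ("model", "model size disclosed", True),
    ("model", "capability evaluations published", True),
    ("downstream", "usage policy published", True),
    ("downstream", "downstream impact reported", False),
]


def transparency_scores(indicators):
    """Return (overall_percent, {domain: percent}) for binary indicators."""
    if not indicators:
        raise ValueError("at least one indicator is required")
    totals = defaultdict(int)  # number of indicators per domain
    met = defaultdict(int)     # number of satisfied indicators per domain
    for domain, _name, satisfied in indicators:
        totals[domain] += 1
        met[domain] += int(satisfied)
    overall = 100 * sum(met.values()) / sum(totals.values())
    by_domain = {d: 100 * met[d] / totals[d] for d in totals}
    return overall, by_domain


overall, by_domain = transparency_scores(INDICATORS)
print(f"overall: {overall:.0f}%")
for domain, pct in by_domain.items():
    print(f"{domain}: {pct:.0f}%")
```

With 100 factors in the list, a model satisfying 54 of them would score 54 percent overall. The per-domain breakdown shows how a category score, such as one for data sourcing, can differ sharply from the overall figure.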

Where training data comes from has become a hot topic in AI; several lawsuits allege that AI companies have included copyrighted material in their data sets. The Stanford study found that most companies are not forthcoming about the source of their data. The BloomZ model from Hugging Face earned the highest score in this category, with 60 percent; no other model scored above 40 percent, and several scored zero.

Companies are also reluctant to disclose who the human workers who refine their models are and what wages they are paid. OpenAI uses reinforcement learning with human feedback to tune its model on which responses are unacceptable. But concerns are rising that the work is outsourced to low-wage workers in developing countries. (See AI in Business, August 18, 2023, In the Trenches of Reinforcement Learning with Human Feedback.)

Bommasani stated, “Labor in AI is a habitually opaque topic, and here it’s very opaque, even beyond the norms we’ve seen in other areas.”

The researchers hope to update the study at least annually, and hope that policymakers around the world will consider it as they craft regulations around AI. “If there’s pervasive opacity on labor and downstream impacts, this gives legislators some clarity that maybe they should consider these things,” Bommasani stated.

Transparency A Path for Regulation in US

While transparency may not be enough to provide AI accountability, it is the focus of members of the US Congress considering how to regulate AI. Several speakers mentioned it at a May 16 hearing of the Senate Judiciary Subcommittee on the Oversight of AI.

For example, NYU Professor Gary Marcus stated, “Transparency is absolutely critical here to understand the political ramifications, the bias ramifications, and so forth. We need transparency about the data. We need to know more about how the models work. We need to have scientists have access to them,” according to an account of the hearing in TechPolicy Press.

The writer of the account, Elizabeth (Bit) Meehan, a political science PhD candidate at George Washington University, stated, “Transparency on its own – collecting and disseminating accurate, broad, and complete information about a system and its behaviors – will not be able to curb the harms of AI.” It will take policymakers, companies and civil society groups working together, she suggested.

While the transparency approach to regulation has its flaws and limitations, it is expedient. “Policymakers don’t have many solutions as likely to find bipartisan support than transparency in their toolkit. It’s a familiar tool that appeals to a wide range of actors on the left and the right,” Meehan stated, while suggesting that transparency rules will require meaningful implementation and enforcement.

What It Is

Defining transparency is another required exercise. Researchers at Lund University in Sweden attempted a definition in a recent paper, “Three Levels of AI Transparency.”

The three levels are algorithmic transparency, interaction transparency and social transparency. “All need to be considered to build trust in AI,” the authors stated. Within AI research, transparency has come to be understood mainly as transparency of the algorithm, closely related to the field of explainable AI. However, that approach has been criticized as “techno-centric.”

“We argue that this is a narrow conceptualization,” the authors state. “AI transparency should extend beyond the algorithm into the entire lifecycle of AI development and application, incorporating various stakeholders.”

The three levels of AI transparency, covering the system, the user and the social context, provide a framework for viewing transparency across these elements together. “This we believe, can serve as a roadmap to better organize and prioritize gaps in trustworthy AI research,” the authors state.

More research is needed on what users need to understand about AI and the role of interaction between users and AI agents. “User-centered research on AI transparency is limited,” the authors state. “Much remains to be learned about what the user needs with regards to AI transparency in various contexts.”

Read the source articles and information in IEEE Spectrum, in TechPolicy Press and in the paper, “Three Levels of AI Transparency” from Lund University, Sweden.
