Big Tech Regulation Gets Serious as EU Fines Meta $1.3B

Time to take stock of AI regulation efforts and the state of AI ethics; AI ethicist Timnit Gebru sees LLMs as “perpetuating dominant viewpoints”

By John P. Desmond, Editor, AI in Business

The question of whether AI can be effectively regulated was answered in part this week when European Union regulators issued a $1.3 billion fine against Meta/Facebook. The fine punishes the company for transferring data from European users to the US in violation of past orders not to do so.

The European Union's General Data Protection Regulation (GDPR) privacy law took effect five years ago, and it was under the GDPR that the restriction was issued to Facebook in 2020. Meta has said it plans to appeal the fine, which came with a grace period of at least five months.

“The unprecedented fine is a strong signal to organizations that serious infringements have far-reaching consequences,” said Andrea Jelinek, chairwoman of the European Data Protection Board, the EU body that assessed the fine, according to an account in The New York Times.

Europe is far ahead of the US on privacy and AI regulation. In 2021 it proposed the Artificial Intelligence Act, which would designate some uses of AI as “high-risk” and impose stricter requirements for transparency, safety and human oversight on them. (See ChatGPT Spurring AI Regulation Efforts Worldwide, April 14, 2023 AI in Business.)

At the recent G7 Summit in Hiroshima, Japan, world leaders called for international standards to regulate AI, which has moved further into the mainstream since the release of ChatGPT last fall and the generative AI models that followed. In a joint statement issued from the gathering, the leaders said that international rules have “not necessarily kept pace” with the rapid growth of AI, according to an account in The Washington Post.

Jake Sullivan, US President Joe Biden’s national security adviser, stated at a G7 briefing, “This is a topic that is very much seizing the attention of leaders of all of these key, advanced democratic market economies.”

He called the meeting “a good start” and encouraged international leaders to keep talking. “Leaders have tasked their teams to work together on what the right format would be for an international discussion around norms and standards going forward,” Sullivan stated.

A Number of International Efforts to Regulate AI Being Tried

Several international attempts to regulate AI have been tried or are under way; a recent account in MIT Technology Review reviewed them.

The efforts include a legally binding AI treaty proposed by the Council of Europe, a human rights organization with 46 member countries. The treaty would require signers to take steps to ensure that AI is designed, developed and applied in a way that protects human rights and the rule of law. It could impose moratoriums on technologies seen as a risk to human rights, such as facial recognition, according to the account.

The Council is scheduled to finish drafting the treaty text by November, according to Nathalie Smuha, a legal scholar and philosopher at the KU Leuven Faculty of Law who advises the Council.

The article's authors saw wide participation among countries as the effort's main strength. Its main weakness is the years-long process of having each country ratify the treaty individually and then implement it in national law.

The authors saw the EU’s AI Act as the effort best positioned to have an impact. “Since it’s the only comprehensive AI regulation out there, the EU has a first-mover advantage,” the authors state. The act is poised to become the world’s de facto standard, as companies that want to do business in the EU adjust their practices to comply. Yet the act is controversial, and tech companies will lobby hard to water down its restrictions as it makes its way through the EU rulemaking process.

Ethicist Timnit Gebru Warned of LLM Dangers

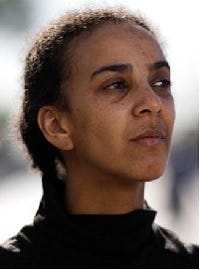

Meanwhile, on the ethics front, Timnit Gebru recently weighed in on the impact of ChatGPT and similar products in a video interview with The Guardian. Gebru, a computer scientist and AI ethicist, separated from her position at Google in December 2020 after a disagreement with management over the publication of a paper warning of potential bias in large language models (LLMs).

The paper Gebru co-authored, “On the Dangers of Stochastic Parrots,” focused on the risk that large language models would reflect the bias, hate speech and other wretched aspects of the World Wide Web they scrape for their data.

“In accepting large amounts of web text as ‘representative’ of ‘all’ of humanity, we risk perpetuating dominant viewpoints, increasing power imbalances and further reifying inequality,” the authors stated in the paper.

After her departure, Gebru founded DAIR, the Distributed AI Research Institute, to conduct AI research outside the realm of big tech. She works with a mix of professionals including social scientists, computer scientists and labor organizers. She gave the Guardian interview recently in Kigali, Rwanda, where she was preparing to speak at the International Conference on Learning Representations, said to be the first AI industry gathering held in an African country.

Calling attention to AI work that goes on in developing countries, Gebru said, “There’s a lot of exploitation in the field of AI, and we want to make that visible so that people know what’s wrong.” She added, “AI is not magic. There are a lot of people involved – humans.”

She sees the current thrashing over worries that AI will take over and wipe out humanity as a distraction. “That conversation ascribes agency to a tool rather than the humans building the tool,” she stated. “That means you can abdicate responsibility: ‘It’s not me that’s the problem. It’s the tool. It’s super-powerful. We don’t know what it’s going to do.’ Well, no – it’s you that’s the problem. You’re building something with certain characteristics for your profit. That’s extremely distracting, and it takes the attention away from real harms and things that we need to do. Right now.”

Based on her experiences, she may be the most credible critic of AI on the scene right now.

Read the source material and info in The New York Times, The Washington Post, MIT Technology Review and The Guardian.

(Write to the editor here; tell him what you would like to read about in AI in Business.)