Machine Learning Model Management Bridges DevOps, MLOps and DataOps

As AI adoption accelerates, AI model management tools are emerging, creating a place where AI and ML teams can share knowledge, ensure good governance practices and speed time to deployment.

By John P. Desmond, Editor, AI in Business

With machine learning being implemented more widely as AI adoption accelerates, data scientists are confronting the need to manage multiple versions of ML models to keep AI operations running smoothly.

This is giving rise to model management tools that help to manage multiple experiments and dozens of parameters.

While open source and intuitive ML frameworks including PyTorch and TensorFlow have lowered the barrier for ML development, organizations that may not have the expertise to build the models themselves may use models made by others.

“Deploying and using ML models in a large enterprise involves a lot of moving parts. Doing it ad-hoc without any solid framework and pipeline in place can make ML development unwieldy,” stated Sumit Ghosh, a developer writing on the blog of Neptune.ai, a company based in Warsaw, Poland, offering a metadata store for MLOps.

Without a way to manage ML models, challenges include:

No record of experiments. Multiple data scientists are likely to be working on the same problem, each running their own set of experiments. Without a shared record, they risk duplicating work.

Insights lost along the way. “In a rapid iterative experimentation phase, an insight generated in an earlier experiment might be lost when the researcher moves on to the next iterations of that model.” A simple solution would be to keep detailed notes manually. “But no one does that because it’s manual and requires a ton of effort,” Ghosh stated.

Difficult to reproduce results. To reproduce a particular experiment, the developer needs to store the model code along with the hyperparameters and the dataset manually, for every iteration of the model. “This requires a ton of effort, so in practice, no one does it,” Ghosh stated.

Cannot search for or query models. Without solid version control and a metadata tracking system, it becomes “nearly impossible” to query past models for insights.

Difficult to collaborate. It is difficult to have other team members effectively review a candidate model. “This problem becomes progressively more challenging as the team size grows,” Ghosh stated. Tools that exist in the software development world need to be available for ML model development, he suggested.
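The challenges above come down to keeping a searchable record of each run. As a minimal sketch only, and not the API of Neptune.ai or any other product, the record might look like the following. The names `ExperimentRecord`, `ExperimentLog`, `fingerprint` and the sample runs are hypothetical and exist purely for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass
class ExperimentRecord:
    """One run: who ran it, on which code and data, and what came out."""
    run_id: str
    author: str
    code_version: str      # assumed to be something like a Git commit hash
    dataset_sha256: str    # fingerprint of the exact training data used
    hyperparameters: dict
    metrics: dict
    notes: str = ""        # insights that would otherwise be lost


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 digest so a dataset can be identified later."""
    return hashlib.sha256(data).hexdigest()


class ExperimentLog:
    """In-memory log; a real team would back this with shared storage."""

    def __init__(self):
        self._records = []

    def log(self, record: ExperimentRecord) -> None:
        self._records.append(record)

    def query(self, metric: str, minimum: float) -> list:
        """Return runs whose metric is at least `minimum`, best first."""
        matches = [
            r for r in self._records
            if r.metrics.get(metric, float("-inf")) >= minimum
        ]
        return sorted(matches, key=lambda r: r.metrics[metric], reverse=True)

    def to_json(self) -> str:
        """Serialize all runs so teammates can review them."""
        return json.dumps([asdict(r) for r in self._records], indent=2)


# Hypothetical usage: two data scientists log runs on the same dataset.
log = ExperimentLog()
data = b"user_id,label\n1,0\n2,1\n"
log.log(ExperimentRecord("run-001", "alice", "abc123", fingerprint(data),
                         {"lr": 0.1}, {"accuracy": 0.81}))
log.log(ExperimentRecord("run-002", "bob", "abc123", fingerprint(data),
                         {"lr": 0.01}, {"accuracy": 0.88},
                         notes="lower learning rate helped"))
best = log.query("accuracy", 0.85)
```

Storing the code version, dataset fingerprint and hyperparameters with each run is what makes a result reproducible, and the `query` method stands in for the search capability that is missing when runs are tracked ad hoc.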

Model Management Entrepreneur Suggests Models, Data and Code Are Three Legs

Manasi Vartak, an entrepreneur in the ML model management market, makes the case that DevOps, MLOps and DataOps need to be managed together for effective AI systems.

“While conventional software involves one thing—code—and not much else, intelligent software relies on a complex relationship between three interconnected variables or legs of a three-legged stool,” stated Vartak, the founder and CEO of Verta.ai, in a recent account she authored in DevOps.

The three legs of the stool are models, data and code, suggested Vartak, who originated the ModelDB machine learning model versioning system while a student at MIT. ModelDB is now a leading open source system.

An AI/ML model may consist of linear regressions, rules and deep neural networks trained to recognize patterns in data and make decisions. “That’s what makes an application ‘intelligent,’” Vartak stated.

A model is usually trained on historical data and programmed to emulate it; thus, the application’s behavior depends heavily on the data, both the raw input data and new data.

The code is the language an application uses to function. “When used in the context of intelligent applications, the code pillar might refer to business logic, calls to models, receiving outputs, decision-making and calls to other data systems,” she stated.

“Regardless of application type, the legs that intelligent applications stand on are always the same: Model, data and code. And if you try to remove any one of them, the application will topple over,” she stated.
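One way to picture the three-legged stool is to version the three legs together, so that a change to any one of them yields a new identifier for the application. The sketch below is illustrative only and is not how Verta or ModelDB work internally; the function names and the sample byte strings are assumptions.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def release_fingerprint(model_bytes: bytes, data_bytes: bytes,
                        code_bytes: bytes) -> str:
    """Combine the digests of model, data and code into one identifier."""
    legs = {
        "model": sha256_hex(model_bytes),
        "data": sha256_hex(data_bytes),
        "code": sha256_hex(code_bytes),
    }
    # Sort by leg name so the result does not depend on dict ordering.
    combined = "|".join(f"{name}={digest}"
                        for name, digest in sorted(legs.items()))
    return sha256_hex(combined.encode("utf-8"))


# Hypothetical inputs: same model and code, but retrained data differs.
baseline = release_fingerprint(b"weights-v1", b"rows-2021", b"def predict(): ...")
retrained = release_fingerprint(b"weights-v1", b"rows-2022", b"def predict(): ...")
```

Here `baseline` and `retrained` differ even though only the data changed, which mirrors the point that the application cannot be described by its code alone.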

MLOps and DevOps Each Have Their Own Engineers

MLOps and DevOps engineers serve different audiences. MLOps primarily serves data scientists, the main users of models, while DevOps usually serves software developers. MLOps engineers typically come from a background in ML and data science, or they were software engineers who picked up ML.

Making it more challenging, “No single all-inclusive MLOps platform exists,” Vartak stated. “Rather, different tools support different individual steps in the model life cycle ... the ecosystem is still somewhat fragmented.”

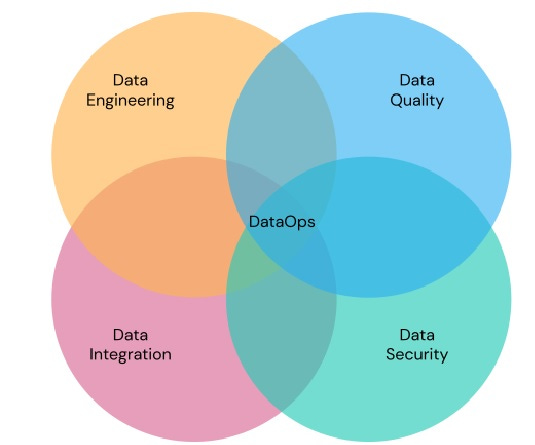

She sees DataOps tools as being in an earlier stage. “When it comes to DataOps, the life cycle is still being defined,” she stated. The overall objective is still to put processes in place that enable high-quality data to be shipped frequently, “which requires a combination of data engineering, data quality, data security and data integration.”

In an interview with AI in Business, Vartak said the Verta platform provides a catalog for models, “a central place to version all the work you have done and a place where your ML and AI teams can share knowledge.” With the Great Resignation leading to more frequent turnover of engineers, keeping track of work that has been done on the data science team is critical. “We provide a way to do that,” she said.

Verta is also used to track adherence to AI governance practices in place at a company, such as explainability and ethical considerations. Larger accounts usually pay by the seat for the platform; smaller companies pay for the service as they go, she said.

One customer is Scribd, a digital library for audiobooks, ebooks, podcasts, magazines, documents and slide presentations. The company uses machine learning to optimize search, make recommendations and improve new features.

In a case history on the Verta website, Qingping Hu, Principal Engineer, Platform Engineering for Scribd, stated, “We didn’t really have a consistent way to keep track of these models – how many models we had in production or what types of models they were.”

Using the Verta platform, the company was able to reduce the time to delivery of a new model from 30 days to less than a day, Vartak stated, adding, “That’s typical of the type of gains we can provide. We help streamline the whole process and provide the infrastructure so that for a company to add AI into their products has become super, super easy.”

Verta has raised $10 million to date; investors include Intel Capital and General Catalyst. The plan for now is to grow the company. “We are in the wave of intelligent software, and that wave will keep building. We’ll be growing a lot. It's a fun time,” she said.

Read the source articles and information on the blog of Neptune.ai and in DevOps.
