OpenAI’s Ilya Sutskever Is Concerned that AI Could Get Out of Control

The OpenAI cofounder voted to remove Sam Altman as OpenAI CEO, then changed his mind and supported Altman’s return; the core issue was his concern about AI superintelligence

By John P. Desmond, Editor, AI in Business

Ilya Sutskever, an OpenAI cofounder and the company’s chief scientist before the latest management shakeup, reversed himself on whether Sam Altman should be ousted: he first voted to remove Altman, then supported restoring him as CEO.

The conflict can best be understood as a collision of two camps: one concerned that AI could get out of control, and one that sees commercial success for OpenAI as the primary path to pursue.

The decision to oust Altman came at a November 18 meeting of four OpenAI board members: Sutskever; Adam D’Angelo, chief executive of the question-and-answer site Quora; Tasha McCauley, an adjunct senior management scientist at the RAND Corporation; and Helen Toner, director of strategy and foundational research grants at Georgetown University’s Center for Security and Emerging Technology, according to an account in The New York Times. McCauley and Toner were removed from the board in the shakeup that coincided with Altman’s return; D’Angelo survived.

The Times reported that McCauley and Toner “have ties to the Rationalist and Effective Altruist movements, a community that is deeply concerned that AI could one day destroy humanity.”

The Times described Sutskever as “increasingly aligned with those beliefs.” He was born in the Soviet Union, grew up in Israel and immigrated to Canada as a teenager. As a graduate student at the University of Toronto, he worked on neural network AI technology with Geoffrey Hinton, the AI scientist who went public with his own fears about AI technology earlier this year.

Sutskever Has Expressed Reservations About the Power of AI

Sutskever expressed his reservations about the power of AI in an interview with MIT Technology Review earlier this year. He worked at Google for a short time, and in 2015 he was recruited to become a cofounder of OpenAI, with Altman, Elon Musk, Peter Thiel, Microsoft, Y Combinator and others. The company was said to have its sights on developing artificial general intelligence, meaning systems with intelligence equal to or surpassing that of humans.

The AI scientist worked on OpenAI’s GPT large language models, from the first appearance in 2016, and he worked on the DALL-E text-to-image model, extending functionality into areas not seen before. The release of ChatGPT in November 2022 changed the game in AI. In less than two months, ChatGPT had 100 million users.

Aaron Levie, CEO of the storage firm Box, tweeted after the launch, “ChatGPT is one of those rare moments in technology where you see a glimmer of how everything is going to be different going forward,” according to the Times account.

With ChatGPT rolled out, AGI started to look more like a possibility. This worried Sutskever. “AGI is not meant to be a scientific term,” he stated in the Times interview. “It’s meant to be a useful threshold, a point of reference.”

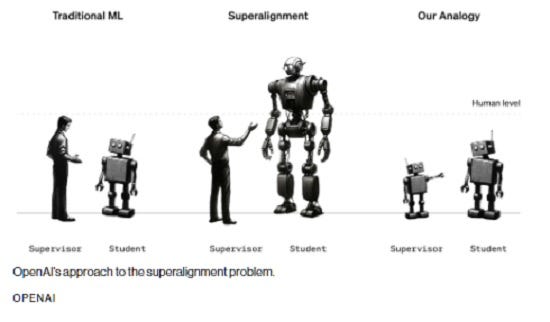

Sutskever grew concerned that machines could outmatch human smarts, in what he calls artificial superintelligence. “They’ll see things more deeply. They’ll see things we don’t see,” he stated. He formed a team at OpenAI with fellow scientist Jan Leike to focus on “superalignment,” which means getting AI models to do what the human engineers want and nothing more. The goal was to arrive at fail-safe procedures for controlling AI, a problem the scientists believed they could solve, in time.

“Existing alignment methods won’t work for models smarter than humans because they fundamentally assume that humans can reliably evaluate what AI systems are doing,” stated Leike to the Times. “As AI systems become more capable, they will take on harder tasks,” which will make it more difficult for humans to assess them. “In forming the superalignment team with Ilya, we’ve set out to solve these future alignment challenges,” Leike stated.

Sutskever stated, “It’s obviously important that any superintelligence anyone builds does not go rogue. Obviously.”

The day after the vote to oust Altman, Sutskever stated the removal had been necessary “to make sure that OpenAI builds AGI that benefits all of humanity,” according to an account in Platformer. On Monday, Sutskever apologized for his role in the coup and expressed his desire to reinstate Altman to his role. “Now that he’s had some time to read the room, apparently, he’s changed his mind,” stated Casey Newton, the founder and editor of Platformer and author of the account.

OpenAI was founded as a nonprofit with a for-profit entity underneath it. “While the membership of the board has changed over the years, its makeup has always reflected a mix of public-benefit and corporate interests. At the time of Altman’s firing, though, it had arguably skewed away from the latter,” Newton stated.

Female Effective Altruists Moved Off OpenAI’s Board

Toner and McCauley had worked in the effective altruism movement, which seeks to have philanthropic investment do the most good possible, but the reputation of the movement suffered last year when one of its famous proponents, Sam Bankman-Fried, was found guilty of criminal fraud and faces years in prison.

According to a recent account in The Atlantic, OpenAI launched ChatGPT a year ago out of fear that Anthropic would beat it to market. The for-profit arm of the company sought to extend its lead over competitors. At its most recent developer conference, OpenAI announced custom chatbots that represent a step toward agents that can perform higher-level tasks. “Agents are a top concern of AI safety researchers, and their release reportedly infuriated Sutskever,” Newton stated.

The new OpenAI board replaces the two ousted women with two men, Bret Taylor and Larry Summers. Taylor, the new chairman of the OpenAI board, is a former co-CEO of Salesforce, sharing the role with Marc Benioff between November 2021 and January 2023, according to an account in tech.co. He also served as chairman of the board of Twitter until Elon Musk bought the company and replaced the board. Earlier, he worked at Google, where as a programmer he was involved in the development of Google Maps.

Larry Summers is an economist whose career includes government and university president roles. He was US Secretary of the Treasury under Bill Clinton between 1999 and 2001, and he served as president of Harvard University from 2001 to 2006.

Altman and Greg Brockman decided not to retain seats on OpenAI’s board. Brockman was an OpenAI cofounder who followed Altman out the door, then decided to return.

In his first public act as chairman, Taylor announced that Microsoft would have a non-voting seat on the OpenAI board. Microsoft has invested some $13 billion in OpenAI and owns 49 percent of its shares.

Altman has stated that the company plans to add to the current board, in an effort to increase its diversity.

Sutskever still lists himself as chief scientist at OpenAI, but he is no longer on the board. According to a December 11 account on Yahoo Finance based on a story in Business Insider, Sutskever was “in a state of limbo” at the company, whose leadership had not yet decided on his future. Meanwhile, Elon Musk issued Sutskever an invitation to work with him, possibly at his startup, xAI.

Musk and Altman convinced Sutskever to leave Google in 2015 to become a cofounder of OpenAI. Musk left the OpenAI board in 2018 after it spurned his plan to take over the company. Musk has called Sutskever the “linchpin” of OpenAI’s success.

OpenAI Yesterday Announced a Commitment to Superalignment Team

In a major development on this topic yesterday, OpenAI announced its commitment to the superalignment team. Members of the team take the eventual superiority of AI over human thinking as a given. “AI progress in the last few years has been just extraordinarily rapid,” stated Leopold Aschenbrenner, a researcher on OpenAI’s superalignment team, in an account published yesterday in MIT Technology Review. “We’ve been crushing all the benchmarks, and that progress is continuing unabated.”

He added, “We’re going to have superhuman models, models that are much smarter than us. And that presents fundamental new technical challenges.”

Superhuman machines do not yet exist, which makes the problem difficult to study. The OpenAI researchers used stand-ins, looking at how GPT-2, a model released five years ago, could supervise GPT-4, the latest and most powerful model. “If you can do that, it might be evidence that you can use similar techniques to have humans supervise superhuman models,” stated Collin Burns, another researcher on the superalignment team.

The approach is viewed as promising but in need of more work. To recruit more researchers to the cause, OpenAI yesterday announced the availability of “Superalignment Fast Grants,” with an application deadline of February 18. Grants of up to $2 million will be awarded to university labs, nonprofits and individual researchers. In addition, OpenAI will award one-year fellowships of $150,000 to selected graduate students.

“We really think there’s a lot that new researchers can contribute,” stated Aschenbrenner. Hopefully, an AI ethicist or two will apply.

Read the source articles and information in The New York Times, in MIT Technology Review from October 26, in Platformer, in The Atlantic, in tech.co, and in MIT Technology Review from December 14.
